
“We could spend years trying to understand them on a physics level, or we could automate it”: Neural DSP’s Doug Castro on how machine learning can surpass human understanding of amplifiers

The man behind the revolutionary AI-driven Quad Cortex floor modeller on what it’s like to peek into the black box.


For the head of a company that’s literally named after neural networks, Doug Castro – the founder of Neural DSP – has a somewhat surprising approach to the tech. “Our mantra at Neural is that we should only use AI when everything else has been ruled out,” he tells us over Zoom, from the company’s head office in Finland (we’d be remiss, also, not to mention the illuminated Neural DSP logo behind him). So how did we get here, where Neural DSP’s Quad Cortex is one of the most powerful hardware modellers out there, all thanks to AI?

It’s been said plenty of times that digital modelling has made huge progress in recent years. The Quad Cortex has been a large part of that, leveraging machine learning (and the kind of processing power engineers in the 2000s could have only dreamed of) to create some truly excellent guitar sounds. But, despite the company’s name, relying on neural nets and AI wasn’t always the overarching game plan.

“Those technologies are so hyped at the moment,” Castro says. “A lot of people are trying to force it into working for problems that might be solved by more conventional methods. It’s not actually a good solution for a lot of things.”

While AI wasn’t always the plan, the Quad Cortex was. But the road to the powerful hardware modeller was always going to be long, as evidenced by the fact that the Quad Cortex arrived in 2021, four years after Neural’s founding. “We started the company to build it,” Doug tells us, “and soon, we realised it would be a lot of hardware development, manufacturing, UX design, and on top of all that, a ton of algorithm research.”

Here, Doug saw an opportunity: “We realised that we had to do all of that DSP work anyway, so we started making plugins right away. That helped us bring in money, and establish the brand – and made sure we set things up for the Quad Cortex to be a success.”

At first, the plugins were being built the old-fashioned way, without machine learning. But the limitations of that approach were soon evident. “We realised it took one of our super-brilliant, world-class PhDs around seven weeks to model an amplifier. Forget about any delays, reverbs, cab simulation, all of that. One amp – seven weeks. And to find enough people that were really good at conventional amp modelling was going to be very hard – there are very few people who understand it from top to bottom – you need to really understand analogue electronics, and DSP algorithms, turning the analogue behaviour into equations, and then you need to be really good at programming so you actually write code that’s efficient and runs the model in real time. Finding one person who can do all that? That’s really hard.”

“So that’s why there are very few companies that are doing amp modelling at a super-high level,” Doug adds. “There aren’t enough smart people working on it. Super smart people usually go into other areas that are far more profitable than, you know, modelling amplifiers…”

Doug also notes that hiring enough engineers and programmers to build the Quad Cortex in just four years would have been financially impossible. And so, as per Neural’s mantra, with the odds of the conventional approach succeeding effectively at zero, it was time to bring out the big guns. “So we thought, if we could automate the development with machine learning, then the odds are still really bad – but a non-zero number! Five per cent might sound horrible, but it’s much better than zero.”

Never tell me the odds

So instead of recruiting engineers for a traditional amp modelling approach, Neural DSP got started on plan B: “finding world-class machine learning people who also played music, and wanted to work on amps.”

“We were lucky enough to run into some really talented people from our local engineering university [Finland’s Aalto University], it has a great audio DSP programme, and some legendary professors. I happened to meet a few guys from the research team who we brought on. I’d told them about this idea, and they actually showed me that they were already working on some machine-learning related modelling. It was a miracle that we met!”

While Doug is dismissive of the idea that neural networks are an instant solution for every problem, it’s hard not to see them as just a little bit magical. “The benefit of machine learning is that with five people, you can do work that could take hundreds of people to do,” Doug says. “We’re always working on the internal tooling and making it more efficient, so it’s not in its final form, but we’re at the point where six machine learning engineers can probably do the work of 10 or 20 people. We want to get it to 10 times, in terms of efficiency. It’s getting there, but there’s still a lot of work to be done.”

As well as efficiency, Doug states that machine learning has helped Neural DSP create incredibly accurate amplifier models. “There’s a lot of things that happen in a tube amplifier that are not really well understood. There’s a lot that we do know, what the tubes are doing, saturation curves and all that – that’s really well documented. But in the later stages of the amplifier, what happens with the interaction between the power supply, the pentodes, the output transformer and the reactive load of the speaker, there’s a lot of weird things that happen there – it’s not really well understood.

“Traditionally, modelling something you understand extremely well is already extremely difficult, and then modelling something you don’t understand fully is really, really hard. I think that’s why many amp models that sound really close do not feel really close, because there’s that 5 per cent that isn’t modelled right because we just don’t understand everything about how the amp is behaving.”

Into the void

The way neural networks function presents a unique shortcut around the problem of modelling something that’s not quite understood. Put very simply, a network is given an input and an output, and then is trained on how to turn one into the other. It doesn’t need to know what’s happening inside the transformer and so on, it just needs to know what goes in, and what comes out. “We realised that we could spend years trying to understand what’s going on on a physics level,” Doug says, “or we could automate it. We just run signals through an amp – we don’t need to understand the effect of each part of an amplifier. If it has an impact on the signal, we should be able to recreate it. So that’s the advantage of black-box modelling like this.”
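To make the idea of black-box modelling a little more concrete, here is a toy sketch in Python. It is not Neural DSP’s method (the company doesn’t share how its models are trained); it simply assumes a made-up, memoryless distortion stage, `mystery_amp`, standing in for the amplifier, and fits a tiny, hypothetical one-hidden-layer network (`TinyMLP`) to recorded input/output pairs with plain stochastic gradient descent. The training loop never looks inside `mystery_amp`: it only sees what goes in and what comes out.

```python
import math
import random
from typing import List, Tuple


def mystery_amp(x: float) -> float:
    """Hypothetical stand-in for the real hardware (a soft-clipping stage).

    The trainer below never inspects this function; it only calls it,
    the way you would play signals through an amp and record the result.
    """
    return 0.8 * math.tanh(3.0 * x)


class TinyMLP:
    """One hidden layer of tanh units feeding a linear output."""

    def __init__(self, hidden: int, rng: random.Random) -> None:
        self.w = [rng.uniform(-2.0, 2.0) for _ in range(hidden)]  # input -> hidden weights
        self.b = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]  # hidden biases
        self.v = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]  # hidden -> output weights
        self.c = 0.0                                              # output bias

    def forward(self, x: float) -> Tuple[float, List[float]]:
        h = [math.tanh(wi * x + bi) for wi, bi in zip(self.w, self.b)]
        y = self.c + sum(vi * hi for vi, hi in zip(self.v, h))
        return y, h

    def sgd_step(self, x: float, target: float, lr: float) -> None:
        y, h = self.forward(x)
        err = y - target  # derivative of 0.5 * err**2 with respect to y
        for i, hi in enumerate(h):
            # Backpropagate through tanh using the weight *before* updating it.
            grad_pre = err * self.v[i] * (1.0 - hi * hi)
            self.v[i] -= lr * err * hi
            self.w[i] -= lr * grad_pre * x
            self.b[i] -= lr * grad_pre
        self.c -= lr * err


def mse(model: TinyMLP, xs: List[float], ys: List[float]) -> float:
    """Mean squared error between the model and the recorded output."""
    return sum((model.forward(x)[0] - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def train(epochs: int = 300, lr: float = 0.05, seed: int = 0) -> Tuple[TinyMLP, float, float]:
    rng = random.Random(seed)
    # "Record" the device: send test signals in, capture what comes out.
    xs = [rng.uniform(-1.0, 1.0) for _ in range(200)]
    ys = [mystery_amp(x) for x in xs]
    model = TinyMLP(hidden=8, rng=rng)
    initial = mse(model, xs, ys)
    pairs = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(pairs)  # visit the recorded samples in a new order each pass
        for x, y in pairs:
            model.sgd_step(x, y, lr)
    return model, initial, mse(model, xs, ys)


if __name__ == "__main__":
    model, before, after = train()
    print(f"MSE before training: {before:.4f}, after: {after:.6f}")
    # Check against a signal the model never saw during training.
    probe = [0.9 * math.sin(2 * math.pi * n / 50) for n in range(50)]
    print("held-out MSE:", mse(model, probe, [mystery_amp(x) for x in probe]))
```

Real amplifiers have memory (their output depends on past samples, not just the current one), so practical models are far larger and take the signal’s history into account; this sketch leaves that out to keep the input-in, output-out principle visible.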

The ‘black box’, in the world of machine learning, refers to the main side-effect of a program effectively writing itself – the logic is often impossible for a human programmer to parse. This effect is more acute with higher-level algorithms in a later stage of their training (“there’s not a human alive that could tell you how Google’s algorithm works,” Doug notes), but it’s not always an inherent aspect of machine learning.

We ask Doug if it’s ever possible to crack the lid on the black box and make changes if you need to. “We do probe inside, a lot. For the black-box modelling to be reliable, you start from a sort of ‘light grey’ approach – that way you can verify things, test things out early. We don’t have a rule that we never open the algorithm – if something weird is going on, then by all means, we open it.”

But, ultimately, how the code translates guitar input to a tone is more opaque. “You can get the desired output every time, but what it’s doing internally is too complex for anyone to understand. Some of them are self-writing code portions in their own code: part of the code of the model writes itself, which is pretty trippy,” Doug says.

Doug doesn’t share the specifics of how the models are actually trained, but he gives us a picture of the kind of processing time and power it takes. “We record signals for a few hours, and then we train the model on those – the training time is going down, we have a huge server in our storage room which we’re using at the moment, but soon we’re going to move it to cloud processing. On our own servers, it would be four to six hours of recording, and then eight hours of training. We’ll be able to cut down the training time significantly with the cloud.”

Open the pedalboard doors, HAL

Just before our chat with Doug, AI had hit the headlines for slightly surreal reasons. An engineer at Google had posted a transcript of his conversation with LaMDA – Google’s AI chatbot – and claimed that the program had achieved sentience. Obviously, the story had the power to create some rather sensational headlines – but Doug and the Neural DSP team were less caught up in the sci-fi hype.

“I read that the transcript was quite edited. And the moment something like that is edited, you know, it can be manipulated to evoke emotions in the reader. Half of our machine learning team come from audio, the other half are more involved with speech synthesis, a much more conventional use for machine learning. And there’s a neuroscientist in the team. I noticed that none of these people were that impressed with the transcript, to be honest. If it had been a breakthrough, I think we wouldn’t have heard about it through a blog post.”

So AI might not be truly sentient just yet – but there are some very human things that it can partake in, such as composition. “Already there’s a lot of amazing tools for assisted composition, so if you’re stuck, they help you by suggesting how you should continue.” We’re curious as to whether Neural DSP might dip its toes into this area. Doug is optimistic, but cautious about biting off more than he can chew. “Just because you can do one thing, it doesn’t mean that you can all of a sudden scale indefinitely – complexity tends to grow exponentially. But it’s possible, if you’ve got a framework you can expand on.”

While compositional tools might not be on the immediate horizon, there is another area where Doug sees much more potential for Neural’s participation: production. “Having machine learning tools that help de-skill that process will be really important. Plugins have already democratised recording, as you don’t need a super fancy studio. Grammys have been won with a decent laptop, an interface and a few hundred dollars’ worth of plugins.

“Now you don’t have to rely on anyone, any labels – you can be in control of your own music. But there’s the other aspect missing – for a true revolution, we need to reduce the amount of skill required. That hasn’t changed much in the last 30 years, you still need to know a lot about recording, mixing, mastering – it’s a whole different set of skills, and if you’ve been playing guitar for 10 years you need another 10 to truly learn production.”

So what does Neural’s involvement in production look like? “Something like what we already do with amp modelling – just vastly bigger. It’s a daunting task – the wall of scaling up looks pretty high, but we’re definitely doing research on it.

“A lot of people know what they want: they have the idea for their song in their head already, they just need a way to record it that sounds great without having to pay someone 500 bucks a track to mix it, and more to get it mastered and so on. If you can remove that pain and that cost, and offer the same thing for orders of magnitude less money – that’s where there’s a lot of potential.”

Branching out into production will perhaps offer Neural DSP some more room to innovate than the potentially restrictive world of guitar tones. “I mean, a Marshall is a Marshall, and it’s been a Marshall for more than 50 years,” Doug says. “How can you innovate that? People want a more convenient way to get that sound, but they don’t want you to reinvent it. With some trippier effects there’s some room to grow but still: you will not reinvent the reverb or the delay or the compressor.”

But, just because Neural DSP didn’t need to reinvent the wheel, sonically speaking, it didn’t mean the Quad Cortex was just another hardware modeller. “The biggest room for improvement was in the user interface,” Doug tells us. “We couldn’t make something that sounds ten times as good as a Universal Audio or Fractal, they’re already very good, but in how easy it is to use – we found there was room for improvement. Sound wise, of course, I think the QC is the best – but, well, I’m biased. It’s my baby!”

Confidence is key

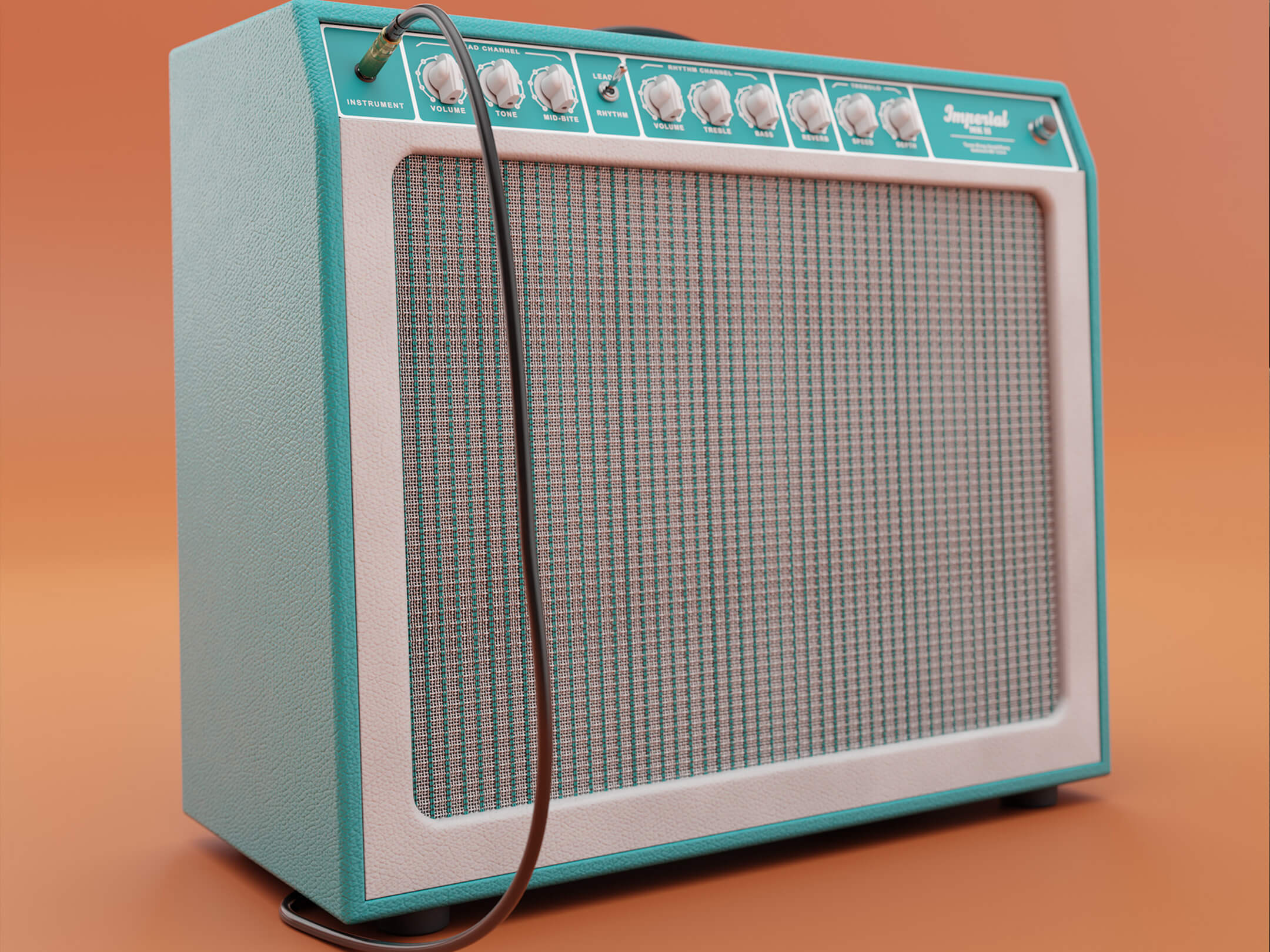

While modelling technology has become more and more accessible, it’s still been most widely adopted by players of a certain style – modern metal suits the tight, controlled sounds it can conjure up. But Doug is optimistic about Neural DSP’s plans to expand its technology to more traditional sounds – the brand has already partnered with Tone King for an amplifier plugin, as well as with funk wizard Cory Wong for what is perhaps the cleanest entry in the Archetype plugin range.

“I think we have a lot to offer to people who, maybe we wouldn’t be the first thing that comes to their mind when it comes to a solution for their guitar sound. I mean, the technology involved truly works for all styles, and even beyond guitar as well. So we’ve been venturing into new styles of music with new Archetype plugins, and partnering with brands that make amplifiers that aren’t heavy stuff.”

“For me, I’m a heavy guy at heart, I listen to a lot of stuff but 70 per cent of what I listen to has really heavily distorted guitars and bass. So we’ll always do the heavy things,” Doug adds – this should be no surprise if you’ve ever browsed Neural’s plugin catalogue, loaded with signature Archetype plugins for exemplary heavy artists from Gojira to Tosin Abasi to Polyphia. There are also digital recreations of some truly crushing amps, such as the Soldano SLO-100.

We ask Doug what he’s listening to right now – he mentions he’s been obsessed with solo project Cloudkicker, and has been learning guitar (Doug is primarily a bassist) by playing his tracks. But he also has a standard rotation of heavy classics: “My usuals are Korn, Gojira, Meshuggah, Dream Theater, Animals As Leaders. I’m still rocking those albums. A lot of Polyphia, too. I feel like I’m doing product placement because I’m naming people we have products with – but I love their music. I was a fan of theirs before working with them, and then suddenly we’re business partners, it’s a really cool thing.

“I think Polyphia are in a class of their own – their music is so beautiful, and also the biggest flex possible. I was listening to Playing God the other day, and my three-year-old daughter – she hates heavy stuff – she loved it. She was like, ‘play it again’, six times! So if you can make stuff that a hardened metalhead like me likes and a three-year-old who hates distorted guitars loves, you’re onto something.”

But not everyone is seeking the same modern-math-djent perfection as Polyphia. “There are a lot of people who don’t want a 5150, they want a Dumble, a Tone King, or something like that. So we want to make sure we do offer enough in our catalogue for those who play all sorts of styles.”

We’re curious as to how Neural intends to address the biases of more traditional players – it’s undeniable that for the most fervent seekers of old-school guitar sound, you’d be more likely to convince them to plug their guitar into a live electrical socket than you would a digital modeller. “There’s a certain percentage of people who will never use digital stuff, and that’s fine,” Doug says, “but that number is decreasing a lot. And that’s the nature of the technology – we have better and better products out each year, and the costs are going down.

“10 years ago you couldn’t get a world-class guitar tone from a $100 plugin – it just didn’t exist. And until Fractal Audio came out, you couldn’t get it in a hardware unit either – I think Fractal changed the game in that regard, and opened the way for the Kemper and the Helix and so on.”

The future of the future

So what’s next for Neural DSP? “At the moment, we’re really focused on continuing to release amazing plugins, and making the QC the best device of its kind. We’re growing the team so we can do more, and do it faster. There are still things we need to accomplish with the QC before we step back and do other things. It’s not just the device, it’s the app, the ecosystem. There’s always research and ideas, but it’s all at a super-early stage.”

It’s clear that, despite his resolve to continue working on the Quad Cortex, it being a real, tangible product is slightly surreal for Doug. “I have some early drawings from the 2010s – pen and paper. I’ve been thinking about this for like, eight years. For two years after the first idea, it became this burning obsession to the point where we had to start another company to actually do it. For a while, I was running Darkglass and Neural at the same time. It was gruelling. But for that time, I was only thinking about one thing, and that was making the QC happen.

“For the last four or five years, there’s been nothing else on my mind – nothing else mattered other than making this happen. Every book I read, every phone call, it was all about how we can actually make sure that this device exists at some point, and that we’re the ones to do it. That’s a very, very intense way to live your life. With a single point of focus. You can go crazy sometimes, and we thought maybe we’d be able to let off the gas a bit after it came out. But of course, there’s other requirements, and people have expectations – in a way, it intensifies once it’s out.”

“The psychological implications of running a company like Neural, one that’s growing all the time, are tough – most of the time you spend in ‘existential crisis’ mode, with a burning obsession at your core.”

But the hard work has paid off – the Quad Cortex is real, and despite the tough odds of a small, rag-tag group of AI engineers standing toe-to-toe with some of the biggest audio companies on the planet, they did it, with the help of whatever’s inside that ever-elusive black box.

We ask: will the Quad Cortex ever truly be finished? “Perhaps at some point,” Doug responds. “But it’s hard for me to see that happening anytime soon!”
